There are plenty of different methods which allow a programmer to grab a bunch of pseudo random numbers which take into account the accuracy of truly randomised values vs. speed. CUDA and thrust parallel primitives offer a variety of host and device API methods to generate random numbers, but also provide a good insight into the processing speed comparison vs. the CPU.

So, as more of a technical experiment to start observing the real performance differences, I started running some basic tests on arbitrary size data containers using four different methods (the first three use host API calls). I’ll go through each one and display their respective performance difference:

- thrust::generate on the host

- thrust primitives with counting_iterators and transform on the device

- cuRand’s Pseudo Random Generator on the device

- Using cuRand’s device API with curandStates to initialize separate CUDA kernels

thrust::generate is a very simple example of using the CPU to quickly fill a container with random numbers and can practically be summarised in a few lines, where _rand is a host function which simply sets the limits on the calculation:

__host__ static __inline__ float _rand()

{

return (0.0 + (float)(rand())/((float)(RAND_MAX/(1.0f - 0.0f))));

}

thrust::host_vector<float> h_vec(100, 0); //creating 100 random numbers

thrust::generate(h_vec.begin(), h_vec.end(), _rand);

//force swap to clear

h_vec.clear();

thrust::host_vector<float>().swap(h_vec);

Pretty simple, the point of showing this is to be able to compare the speed of this method to the other three GPU based implementations.

The second is slightly more complicated, but is from what I can see the easiest way of creating a device vector of random numbers using the GPU without using curand. Here, we use a tranformation of counting iterators to calculate a value per element of the rng sequence. Basically each counting iterator is fed into an op which discards the iterator amount from a created thrust::default_random_engine to avoid correlation and applys a distribution (if needed). I’ve found the need to explicitly set the execution policy with the first parameter thrust::device for the transform, else you tend to get some crazy results.

int _seed = rand(); thrust::device_vector<float> d_data(100); //100 floats thrust::counting_iterator<unsigned int> index_sequence_begin(_seed); thrust::transform(thrust::device, index_sequence_begin, index_sequence_begin + (100), d_data.begin(), psrngen(0.0f, 1.0f)); //force swap to clear d_data.clear(); thrust::device_vector<float>().swap(d_data);

The operator in this case can be defined with our own structure, hence the choice of an applied uniform distribution in this implemention.

struct psrngen

{

__host__ __device__ psrngen(float _a, float _b) : a(_a), b(_b) {;}

__host__ __device__ float operator()(const unsigned int n) const

{

thrust::default_random_engine rng;

thrust::uniform_real_distribution<float> dist(a, b);

rng.discard(n);

return dist(rng);

}

float a, b;

};

This method gives a the ability to easily change the limits and way the random numbers are created, however as you will see below, a simple curand implemention will do the same.

With the third method, just as before, we create a pseudo_random_generator (this time using curand), pass it a randomly generated seed and leave it down to the basic wrapped API methods to handle the generation. The function names are pretty self explanatory, with curandGenerateUniform ‘s default limits being between 0.0f and 1.0f. checkCudaErrors & CURAND_CALL are basic checks for the status returns.

float *deviceData; curandGenerator_t gen; srand(time(NULL)); int _seed = rand(); //allocate space for 100 floats on the GPU //could also do this with thrust vectors and pass a raw pointer checkCudaErrors(cudaMalloc((void **)&deviceData, sizeof(float) * 100)); CURAND_CALL(curandCreateGenerator(&gen, CURAND_RNG_PSEUDO_DEFAULT)); CURAND_CALL(curandSetPseudoRandomGeneratorSeed(gen, _seed)); CURAND_CALL(curandGenerateUniform(gen, deviceData, 100)); //generate the random numbers CURAND_CALL(curandDestroyGenerator(gen)); checkCudaErrors(cudaFree(deviceData)); deviceData = NULL;

The last way I’m going to show is by using curand device API on kernels. Here, we need to allocate space for each individual kernels curandState as well as the device container for the random results. We can then initialize the kernels we are going to use to a particular curandState (the state of the random number generator) by calling curand_init with a given seed and different subsequence value to create a different starting position per kernel. You have the ability here to generate the same sequences for each thread by giving curand_init the same subsequence and offset values!

It’s then a simple case of launching the initialized kernels again ( to avoid calling curand_init) and using the wrapper functions to generate either pseudorandom or quasi random numbers. I’m using curand_uniform to generate floats between 0.0f and 1.0f as before. You can copy the generator state used in local memory for fast generation, and store it back in global memory between kernel launches.

srand(time(NULL));

//naively setting the threads per block and block per grid sizes, where 100 is the amount of rngs

int threadsPerBlock = 1024;

int nBlocks = 100/threadsPerBlock + 1;

//alocate space for each kernels curandState

curandState* deviceStates;

checkCudaErrors(cudaMalloc(&deviceStates, 100*sizeof(curandState)));

//call curand_init on each kernel with the same random seed

//and init the rng states

initialise_curand_on_kernels<<<nBlocks, threadsPerBlock>>>(deviceStates, unsigned(time(NULL)));

getLastCudaError("initialise_curand_on_kernels failed");

cudaSyncAndCheck();

//allocate space for the device container of rns

float* d_random_floats;

checkCudaErrors(cudaMalloc((void**) &d_random_floats, sizeof(float)* 100));

//calculate per element of the container a rn

set_random_number_from_kernels<<<nBlocks, threadsPerBlock>>>(d_random_floats, deviceStates, 100);

getLastCudaError("set_random_number_from_kernels failed");

cudaSyncAndCheck();

//cleanup

checkCudaErrors(cudaFree(d_random_floats));

checkCudaErrors(cudaFree(deviceStates));

The init kernel only requries a call to curand_init:

__global__ void initialise_curand_on_kernels(curandState * state, unsigned long seed)

{

int idx = blockIdx.x*blockDim.x+threadIdx.x;

curand_init(seed, idx, 0, &state[idx]);

}

And the generator essentially sets up and calls one of curand’s distributors:

__device__ float generate(curandState* globalState, int ind)

{

//copy state to local mem

curandState localState = globalState[ind];

//apply uniform distribution with calculated random

float rndval = curand_uniform( &localState );

//update state

globalState[ind] = localState;

//return value

return rndval;

}

__global__ void set_random_number_from_kernels(float* _ptr, curandState* globalState, const unsigned int _points)

{

int idx = blockIdx.x*blockDim.x+threadIdx.x;

//only call gen on the kernels we have inited

//(one per device container element)

if (idx < _points)

{

_ptr[idx] = generate(globalState, idx);

}

}

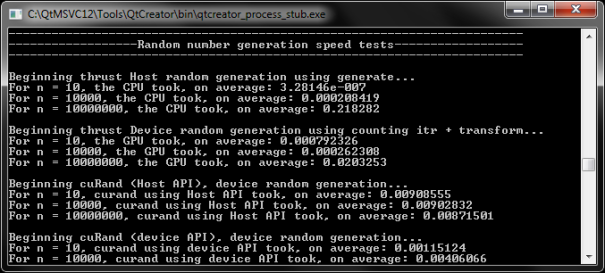

Now the interesting part; how long do each of these methods take to perform for a variety of random numbers! The test cases I have run always apply a uniform distribution and generate numbers between 0.0f and 1.0f using the corresponding API methods for different container sizes (10, 10000, 10000000) and take an average time of 100 calls to each case. Bear in mind that the data in all these GPU examples is being left on the device, and the timings do not account for device->host overhead if it is needed. There is an issue I’m facing for larger amounts using the device API, I think due to the naive way I’m launching the kernels, so I’ve only managed to provide results for n= 10 and 10000 for this method. I’m using the timer code I previously uploaded to monitor these.

The allocation and processing speed of the CPU will pretty much always be faster with smaller container sizes, bearing in mind this could be paralyzed even further (by using OpenMP for example), but the main limitation of this is it does require a halt to the translation unit. I’m uncertain how thrust deals with the device generation, however for smaller and decently sized container sizes it seems to perform really well. cuRand’s host API will almost always guarantee a result dependant on your hardware and generator type, the container size does not deviate the processing speed much due to the way it optimizes the generation on the device, however you can get better performance even on this by using the device API. In general, you will get the best performance from cuRand by generating containers of random numbers that are as large as possible. The docs on the cuRand library are really good and the examples worth looking at for more info.

Leave a comment